Thus far I have been playing around with HDFView to extract HDF5 image data – but I am very interested in working with advanced machine learning systems and developing processes to interface them with Unreal Engine and on-line data sources. The most tractable way to do this seems to be through a Python API, since Python is pretty much the lingua franca of scientific computing.

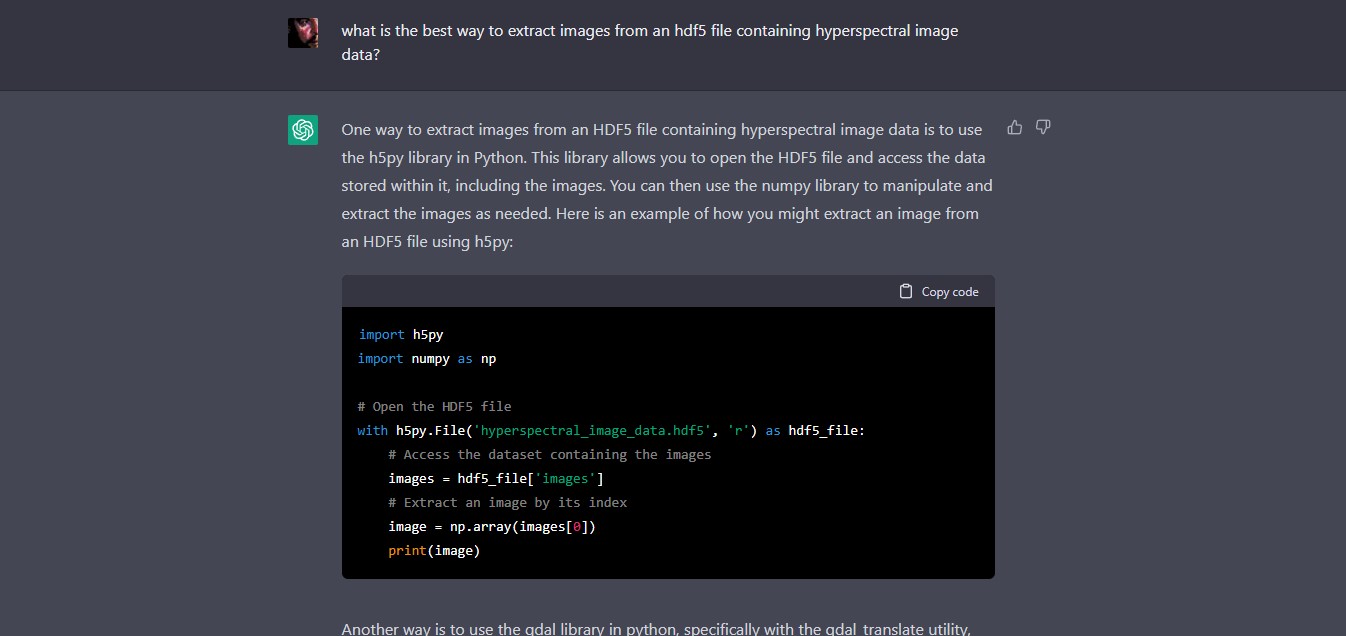

As I am a neophyte with using hyperspectral image data, let’s use ChatGPT to find out what might be an ‘easy’ or ‘best’ way to access image data within an hdf5 file:

ChatGPT:

One way to extract images from an HDF5 file containing hyperspectral image data is to use the h5py library in Python. This library allows you to open the HDF5 file and access the data stored within it, including the images. You can then use the numpy library to manipulate and extract the images as needed. Here is an example of how you might extract an image from an HDF5 file using h5py:

    import h5py
    import numpy as np

    # Open the HDF5 file
    with h5py.File('hyperspectral_image_data.hdf5', 'r') as hdf5_file:
        # Access the dataset containing the images
        images = hdf5_file['images']
        # Extract an image by its index
        image = np.array(images[0])
        print(image)

Another way is to use the gdal library in python, specifically with the gdal_translate utility, which can convert HDF5 to other raster formats like tiff or geotiff, and then use any image processing library to open and read the image.

    gdal_translate -of GTiff HDF5:"file.hdf5"://dataset output.tif

In this way you will be able to use any library of your preference to read and extract the images.

I currently have no idea whether this will work, so let’s look up the relevant documentation:

- h5py: The h5py package is a Pythonic interface to the HDF5 binary data format – https://www.h5py.org

- gdal_translate: Converts raster data between different formats. https://gdal.org/programs/gdal_translate.html

Both look promising – and ChatGPT has saved me a heap of time looking for ways to do it!

The first returns images as a NumPy array – meaning that we can examine or export each image by its index – which would be useful for selecting certain λ (wavelength) values and conducting operations upon them.
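To make the idea of selecting by wavelength concrete, here is a minimal sketch using NumPy alone. Everything in it is made up for illustration: the cube is synthetic, the `wavelengths_nm` values are invented, and I am assuming the band axis comes first, which a real file may not do. The helper names `nearest_band` and `band_range` are my own, not part of any library.

```python
import numpy as np

# Hypothetical cube: 5 bands of 4x3 pixels, band axis first (an assumption;
# real files may store the band axis last).
wavelengths_nm = np.array([450.0, 550.0, 650.0, 750.0, 850.0])
cube = np.arange(5 * 4 * 3, dtype=np.float32).reshape(5, 4, 3)


def nearest_band(wavelengths, target_nm):
    """Return the index of the band whose wavelength is closest to target_nm."""
    return int(np.argmin(np.abs(wavelengths - target_nm)))


def band_range(wavelengths, low_nm, high_nm):
    """Return the indices of all bands with low_nm <= wavelength <= high_nm."""
    return np.flatnonzero((wavelengths >= low_nm) & (wavelengths <= high_nm))


# Pick the single band nearest to 660 nm as a 2-D image.
red_idx = nearest_band(wavelengths_nm, 660.0)
red = cube[red_idx]

# Average every band between 700 and 900 nm into one 2-D image.
nir_idx = band_range(wavelengths_nm, 700.0, 900.0)
nir_mean = cube[nir_idx].mean(axis=0)

print(red_idx, red.shape, nir_idx.tolist(), nir_mean.shape)
```

With a real file, the cube would come from the HDF5 dataset and the wavelength list from wherever the sensor metadata keeps it, which I have not yet looked into.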

The second uses GDAL (Geospatial Data Abstraction Library), which provides powerful utilities for the translation of geospatial metadata – enabling correct geolocation of the hyperspectral image data, for instance.
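As far as I understand it, the geolocation side rests on a six-number affine "geotransform" that GDAL attaches to a raster (its Python bindings expose it through `GetGeoTransform()`). The sketch below shows only the arithmetic that maps a pixel position to map coordinates, in plain Python, so it runs without GDAL installed. The `pixel_to_geo` helper and the example values are hypothetical, not taken from any real dataset.

```python
def pixel_to_geo(geotransform, col, row):
    """Map a pixel (col, row) to map coordinates using a GDAL-style geotransform.

    The tuple is (x_origin, pixel_width, row_rotation,
                  y_origin, col_rotation, pixel_height),
    where pixel_height is negative for a north-up image.
    """
    gt = geotransform
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


# Hypothetical north-up raster: top-left corner at (500000, 4100000), 30 m pixels.
example_gt = (500000.0, 30.0, 0.0, 4100000.0, 0.0, -30.0)

print(pixel_to_geo(example_gt, 0, 0))    # top-left corner of the image
print(pixel_to_geo(example_gt, 10, 20))  # 10 columns right, 20 rows down
```

The coordinates only mean something together with the raster's coordinate reference system, which is part of the metadata GDAL carries across when it converts formats.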

So perhaps a combination of both will be useful as we proceed.

But of course, any code generated by ChatGPT, OpenAI Codex or other AI assistants must be taken with several grains of salt. For instance – a recent study by Stanford researchers suggests that users may write more insecure code when working with an AI code assistant (https://doi.org/10.48550/arXiv.2211.03622). Perhaps whole API calls and phrases are hallucinated? I simply don’t know at this stage.
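One cheap sanity check is to list what an HDF5 file really contains before trusting a dataset name like `'images'`, which ChatGPT may well have guessed. Below is a minimal sketch using h5py's `visititems`; the `list_datasets` helper is my own name, and I have not yet run it against my hyperspectral files.

```python
import h5py


def list_datasets(path):
    """Return {dataset_path: (shape, dtype)} for every dataset in an HDF5 file."""
    found = {}

    def visit(name, obj):
        # visititems calls this for every group and dataset; keep datasets only.
        if isinstance(obj, h5py.Dataset):
            found[name] = (obj.shape, str(obj.dtype))

    with h5py.File(path, "r") as f:
        f.visititems(visit)
    return found
```

Calling `list_datasets('hyperspectral_image_data.hdf5')` should show whether a dataset called `images` exists at all, and what shape it has, before any indexing is attempted.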

So – my next step will be to fire up a Python environment – probably Google Colab or Anaconda – and see what happens.

A nice overview of Codex here:

OpenAI Codex Live Demo
