It occurs to me that, rather than going to all the hassle of figuring out how to write the Colab code myself, I can get my ChatGPT assistant to do it for me:

Q: write a script for google colab to extract images from an hdf5 file located upon my google drive

ChatGPT:

Here is an example script that demonstrates how to extract images from an HDF5 file located on your Google Drive using the h5py library in Python, and the PyDrive library to interact with Google Drive:

    # Install the PyDrive library
    !pip install -U PyDrive

    # Import the necessary libraries
    import h5py
    import numpy as np
    from pydrive.auth import GoogleAuth
    from pydrive.drive import GoogleDrive
    from google.colab import auth
    from oauth2client.client import GoogleCredentials

    # Authenticate and create the PyDrive client
    auth.authenticate_user()
    gauth = GoogleAuth()
    gauth.credentials = GoogleCredentials.get_application_default()
    drive = GoogleDrive(gauth)

    # Get the HDF5 file from your Google Drive
    file_id = '<YOUR FILE ID>'
    downloaded = drive.CreateFile({'id': file_id})
    downloaded.GetContentFile('hyperspectral_image_data.hdf5')

    # Open the HDF5 file
    with h5py.File('hyperspectral_image_data.hdf5', 'r') as hdf5_file:
        # Access the dataset containing the images
        images = hdf5_file['images']

        # Extract an image by its index
        image = np.array(images[0])
        print(image)

Make sure to replace <YOUR FILE ID> with the actual file ID of the HDF5 file on your Google Drive. You can find the file ID by opening the file in Google Drive and looking at the URL – the file ID is the string of letters and numbers after the last forward slash.

You can also use the gdal_translate command with the PyDrive library as well, just add the following lines of code to download the file and then use gdal_translate command to convert to tiff or geotiff

    # Get the HDF5 file from your Google Drive
    file_id = '<YOUR FILE ID>'
    downloaded = drive.CreateFile({'id': file_id})
    downloaded.GetContentFile('hyperspectral_image_data.hdf5')

    # Use gdal_translate
    !gdal_translate -of GTiff HDF5:"hyperspectral_image_data.hdf5"://dataset output.tif

You can then use any image processing library to open and read the image.

There are lots of interesting things to unpack in this advice, and I expect to find lots of errors as I debug the code.

So, as a first step, let’s see if we can just get access to the file and some basic metadata.

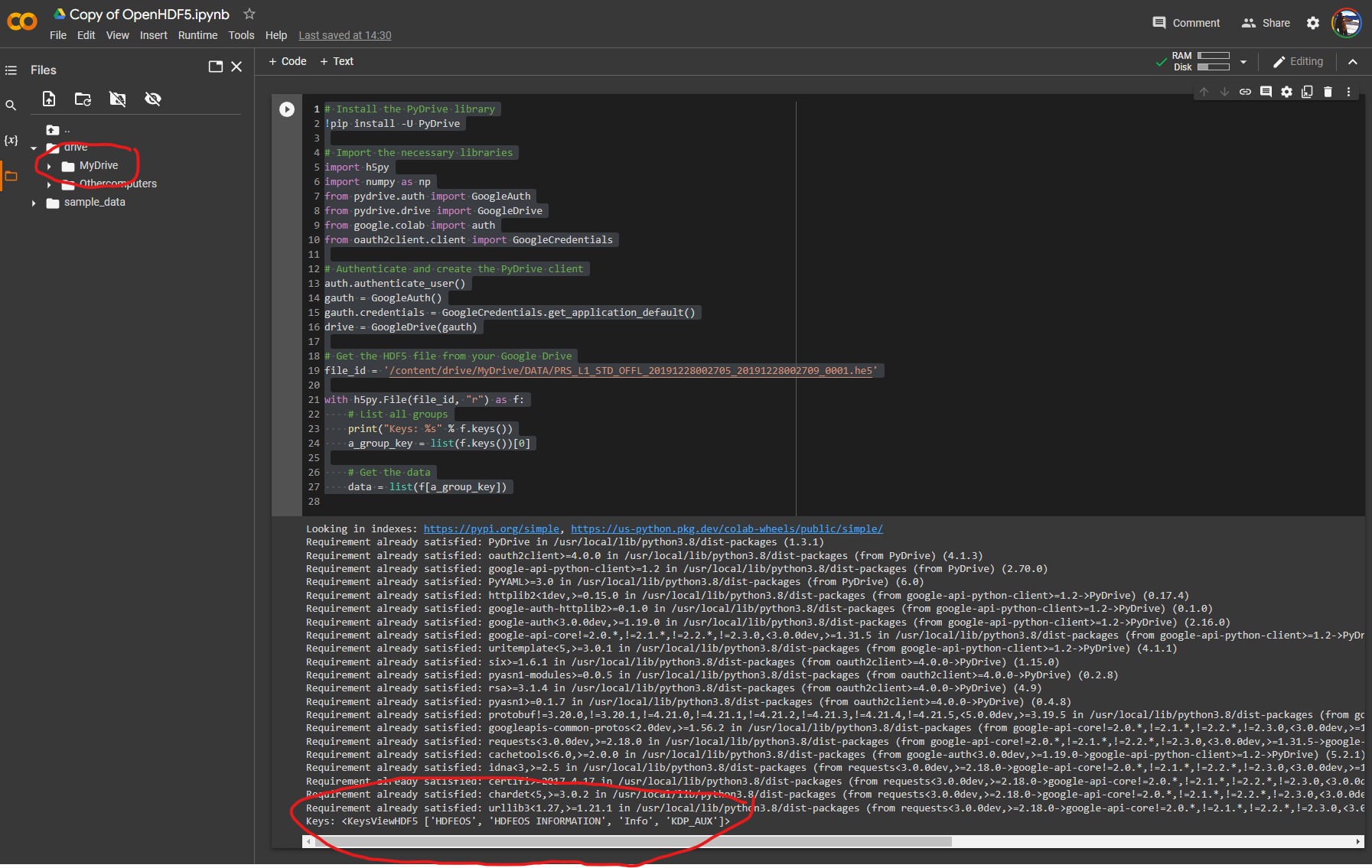

    # Install the PyDrive library
    !pip install -U PyDrive

    # Import the necessary libraries
    import h5py
    import numpy as np
    from pydrive.auth import GoogleAuth
    from pydrive.drive import GoogleDrive
    from google.colab import auth
    from oauth2client.client import GoogleCredentials

    # Authenticate and create the PyDrive client
    auth.authenticate_user()
    gauth = GoogleAuth()
    gauth.credentials = GoogleCredentials.get_application_default()
    drive = GoogleDrive(gauth)

    # Get the HDF5 file from your Google Drive
    file_id = '/content/drive/MyDrive/DATA/XXXXXXX.he5'

    with h5py.File(file_id, "r") as f:
        # List all groups
        print("Keys: %s" % f.keys())
        a_group_key = list(f.keys())[0]

        # Get the data
        data = list(f[a_group_key])

Success! We can see the HDF keys returned below:

    Keys: <KeysViewHDF5 ['HDFEOS', 'HDFEOS INFORMATION', 'Info', 'KDP_AUX']>

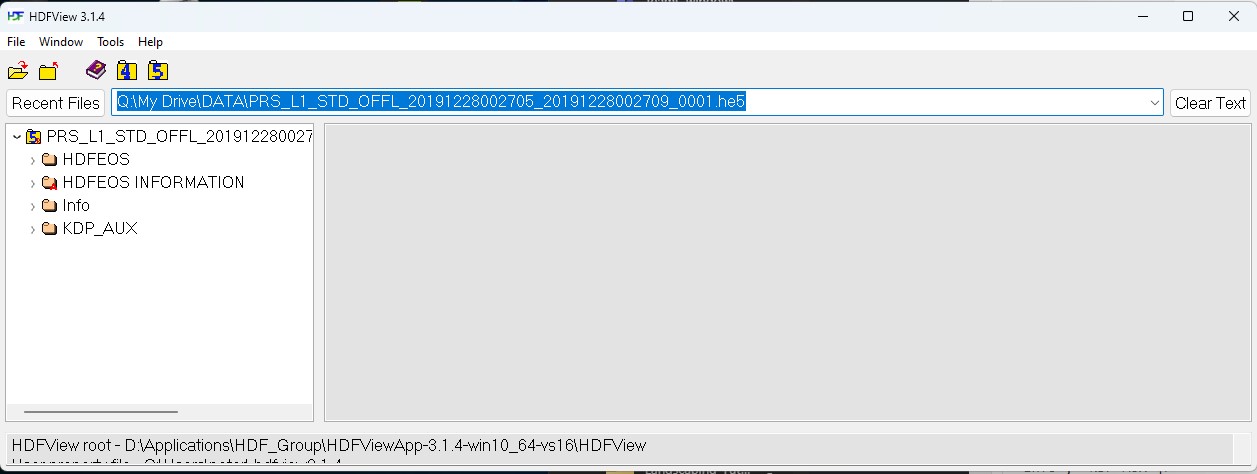

These match the top-level directory structure revealed by HDFView:
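One thing worth noting about the script that produced these keys: the path handed to `h5py.File` sits under `/content/drive`, which only exists once Google Drive has been mounted in the Colab session, so the PyDrive authentication is probably not what made the read work. A minimal sketch of the mount-only route, assuming it is run inside Colab; the helper name `list_top_level_keys` is my own, and the path is the same placeholder as above:

```python
import os

import h5py


def list_top_level_keys(path):
    """Return the names of the top-level groups/datasets in an HDF5 file."""
    with h5py.File(path, "r") as f:
        return list(f.keys())


try:
    # google.colab is only importable inside a Colab runtime
    from google.colab import drive
    drive.mount("/content/drive")
except ImportError:
    print("Not running in Colab, skipping the Drive mount")

# Placeholder path: substitute the real location of the .he5 file
he5_path = "/content/drive/MyDrive/DATA/XXXXXXX.he5"
if os.path.exists(he5_path):
    print(list_top_level_keys(he5_path))
```

With Drive mounted this way, the file is read in place and there is no need to download a copy via a file ID.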

This is the first step in identifying the file contents. From here we can drill down into the file structure to identify a whole bunch of parameters about the datacube that we can do interesting things with 🙂
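As a rough idea of what that drilling down could look like, here is a sketch that walks the whole file with h5py's `visititems` and reports every group and dataset along with its shape and dtype. The function names `describe_hdf5` and `print_tree` are hypothetical helpers of mine, and I have not assumed anything about what sits below the four top-level keys:

```python
import h5py


def describe_hdf5(path):
    """Collect (name, kind, shape, dtype) for every object in the file."""
    entries = []

    def visitor(name, obj):
        if isinstance(obj, h5py.Dataset):
            entries.append((name, "dataset", obj.shape, str(obj.dtype)))
        elif isinstance(obj, h5py.Group):
            entries.append((name, "group", None, None))
        else:
            entries.append((name, "other", None, None))
        # Returning None tells visititems to keep walking

    with h5py.File(path, "r") as f:
        f.visititems(visitor)
    return entries


def print_tree(path):
    """Print an indented listing of the file's groups and datasets."""
    for name, kind, shape, dtype in describe_hdf5(path):
        indent = "  " * name.count("/")
        leaf = name.split("/")[-1]
        if kind == "dataset":
            print(f"{indent}{leaf}  shape={shape} dtype={dtype}")
        else:
            print(f"{indent}{leaf}/")
```

Calling `print_tree` on the `.he5` path should give roughly the same view as HDFView, which makes it easier to spot which dataset actually holds the datacube.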