Throughout this residency I’ve been working with two development platforms to try to evaluate which offers the ‘best’ (easiest? optimal?) workflow for the ideas we’ve been exploring. Even at this penultimate stage, things are unclear, so I thought I’d unpack some of the similarities, differences and interactions between the approaches.

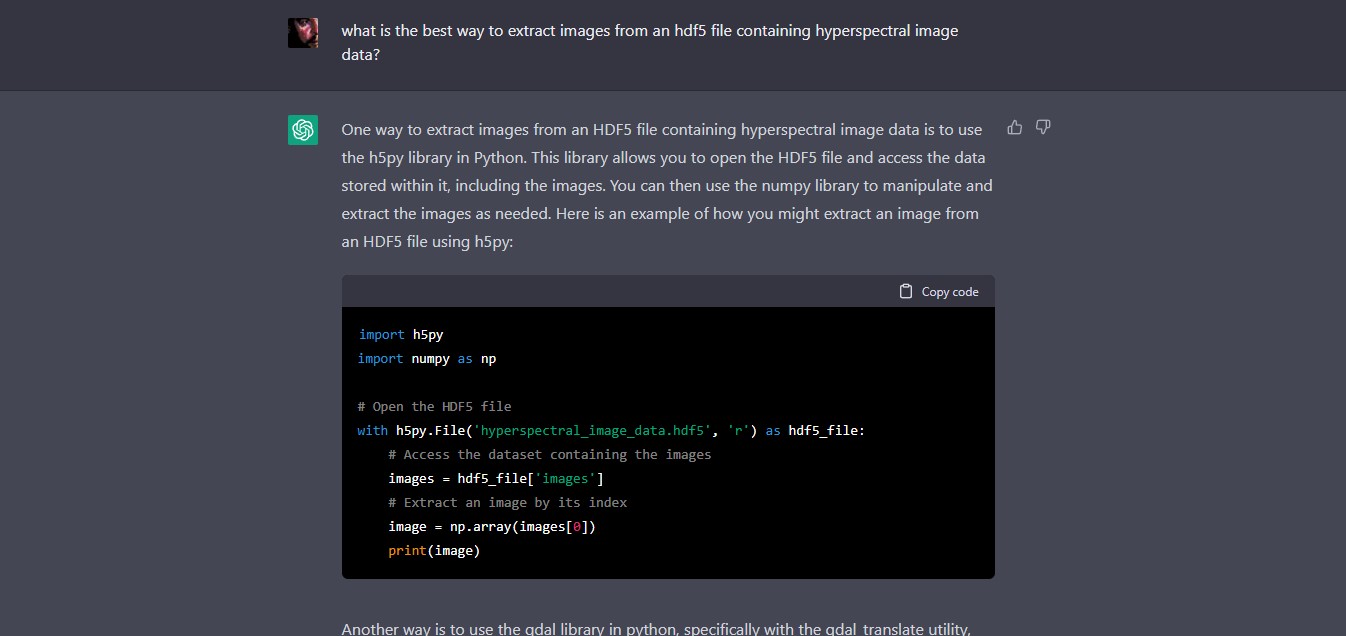

Here are two takes (after a few unsatisfactory tries) from ChatGPT 4 and Google Bard, using the prompt:

“You are an expert computer artist and programmer. Explain what are the differences and similarities between NVIDIA Omniverse platform and Unreal Engine 5? Create a list of 5 (or so) dot points that clearly indicate when each approach would be the optimal one.”

They’re quite useful – generic (as expected) – and indeterminate because there is so much replication, cross-over and interaction of features.

<aside>Reading these responses, I suppose machine-generated text like this inevitably speeds the end of some national, regional, alternative and idiomatic versions of English – “ise/ize”, “colour/color”, “mould/mold” etc.</aside>

It seems to come down to a few points that I’ll detail at the end.

GPT4:

As a computer artist and programmer, you are looking at a blend of aesthetics, functionality, and the ability to customize and extend the features of a platform. As of my knowledge cutoff in September 2021, both Unreal Engine 5 and NVIDIA Omniverse have features that will appeal to artists and programmers, albeit with different strengths.

**Unreal Engine 5:**

1. **Game Development**: Unreal Engine 5 is built primarily for the development of games, with a wealth of tools for creating, scripting, and animating game worlds.

2. **Blueprint and C++**: UE5 provides both visual scripting through Blueprint and full source code access with C++, giving you a range of options for scripting and customizing your projects.

3. **Nanite and Lumen**: Nanite allows you to import high-fidelity, cinema-quality art assets directly into the engine, while Lumen provides dynamic, real-time global illumination.

4. **Multi-platform Deployment**: UE5 supports a wide range of platforms, making it ideal for game and application development across devices.

5. **Community and Resources**: The Unreal Engine has a large and active community, which means extensive resources, tutorials, and support.

**Optimal for**: Artists and programmers focused on game development or creating other interactive, real-time experiences. If you need access to source code or want a large community for support, Unreal Engine is a strong choice.

**NVIDIA Omniverse:**

1. **Collaboration and Interoperability**: Omniverse is designed as a platform for collaboration, allowing multiple users to work together in real-time across different applications, thanks to its use of USD (Universal Scene Description).

2. **Physically Accurate Rendering**: Omniverse provides highly accurate, real-time ray tracing rendering with the NVIDIA RTX platform, which is ideal for projects requiring high-quality visualization.

3. **AI Integration**: Omniverse leverages NVIDIA’s AI technologies, offering unique possibilities for AI-enhanced simulation, animation, and more.

4. **Infrastructure Management**: Omniverse can be used to visualize and manage data center infrastructure, an application unique to NVIDIA’s platform.

5. **Integration with other NVIDIA tools**: Omniverse is designed to work seamlessly with other NVIDIA tools, such as Deep Learning Super Sampling (DLSS), enhancing the platform’s capabilities.

**Optimal for**: Collaborative projects requiring real-time contributions across different applications, projects requiring high-fidelity, physically accurate rendering, and tasks involving AI-enhanced graphics or simulation.

Please keep in mind that both platforms continue to evolve and have likely added new features or improvements since my last training data in September 2021.

BARD:

Sure. Here are the similarities and differences between NVIDIA Omniverse platform and Unreal Engine 5:

Similarities

- Both are powerful 3D creation tools.

- Both can be used to create realistic and immersive 3D experiences.

- Both support a wide range of features, including 3D modeling, animation, rendering, and simulation.

- Both are used by a wide range of professionals, including game developers, architects, and filmmakers.

Differences

- NVIDIA Omniverse platform is a more collaborative platform, designed to help teams work together on 3D projects.

- Unreal Engine 5 is a more game-oriented platform, designed to create high-performance, real-time 3D experiences.

- NVIDIA Omniverse platform is currently only available for Windows, while Unreal Engine 5 is available for Windows, macOS, and Linux.

Here are 5 dot points that clearly indicate when each approach would be the optimal one:

- NVIDIA Omniverse platform is the optimal choice for:

  - Collaborative 3D projects: The platform’s real-time collaboration features make it easy for teams to work together on 3D projects, regardless of their location.

  - Large-scale projects: The platform’s scalability makes it well-suited for large-scale projects that require multiple users and high-performance computing.

  - Complex projects: The platform’s powerful tools and features make it well-suited for complex projects that require a high level of detail and realism.

- Unreal Engine 5 is the optimal choice for:

  - Game development: The platform’s real-time rendering and performance make it well-suited for developing high-performance, visually stunning games.

  - Virtual reality and augmented reality: The platform’s support for VR and AR makes it well-suited for developing immersive experiences.

  - Filmmaking: The platform’s powerful tools and features make it well-suited for creating realistic and immersive visual effects for films.

I hope this helps!

It does indeed – thanks Machine Intelligence! 🙂

The main differences are subtle and strategic, and perhaps more effectively understood in the context of industrial competition and the territorialisation of artistic and developer activity – driven by business. It’s basically technological evolution driven by capitalism, not social good or woo-woo philosophical outcomes. Was it ever not thus? Well, yes – open source provides an important alternative model, but it has significant infrastructural constraints.

Omniverse provides – at a basic level – an incredible toolset that enables the development of bespoke applications for realtime visualisation and simulation. The workflow is quite different to UE, but it provides access to all its features in Python (above) and C++ (below), plus a graph visual language that is easy to use, including standardised UI features. So it is nice and fast, even if it’s a bit crashy at this stage. It’s pretty compelling and I am interested to learn more, especially as it develops in the direction of UIs for XR streaming across multiple platforms and the integration of AI and physics simulations (e.g. via ParaView). It’s very modular – reminiscent of OpenDoc – but not open! Forgotten dreams of a better world. Really, Omniverse could be access-controlled open source, like Unreal is. And much more free (not only in $$, but in principle).

Of course, all these systems ~could~ be like an improved version of OpenDoc. But $$$ – it’s in their interests, currently, to be non-interoperable.

Unreal Engine is more mature – and much more stable, in my experience. But far more monolithic – perhaps it could be modularised. There is an emergent UI Library, but UE UI (UMG) seems counter-intuitive to me – it’s complex to get your head around (I understand why it is as it is, because of C++ legacy, but feel it needn’t be this way – UI could be an intuitive MVC plastic layer, not casting, binding and widgets).

Cesium runs both in Omniverse and UE – is it possible to create an Omniverse USD scene using Cesium and pipe that into UE using an Omniverse connector or is it best just to use the UE Cesium plugin?

An interesting Omniverse/UE co-simulation here.

It would be nice if UE had ~easy~ realtime server capabilities like Omniverse, without all the cross-platform asset issues of version control with Git, LFS, Bitbucket, GitHub, Plastic, Perforce, Subversion etc. This is something I need to investigate to find an optimal solution for our use-case. Of course, everything is complicated in a non-commercial research context.

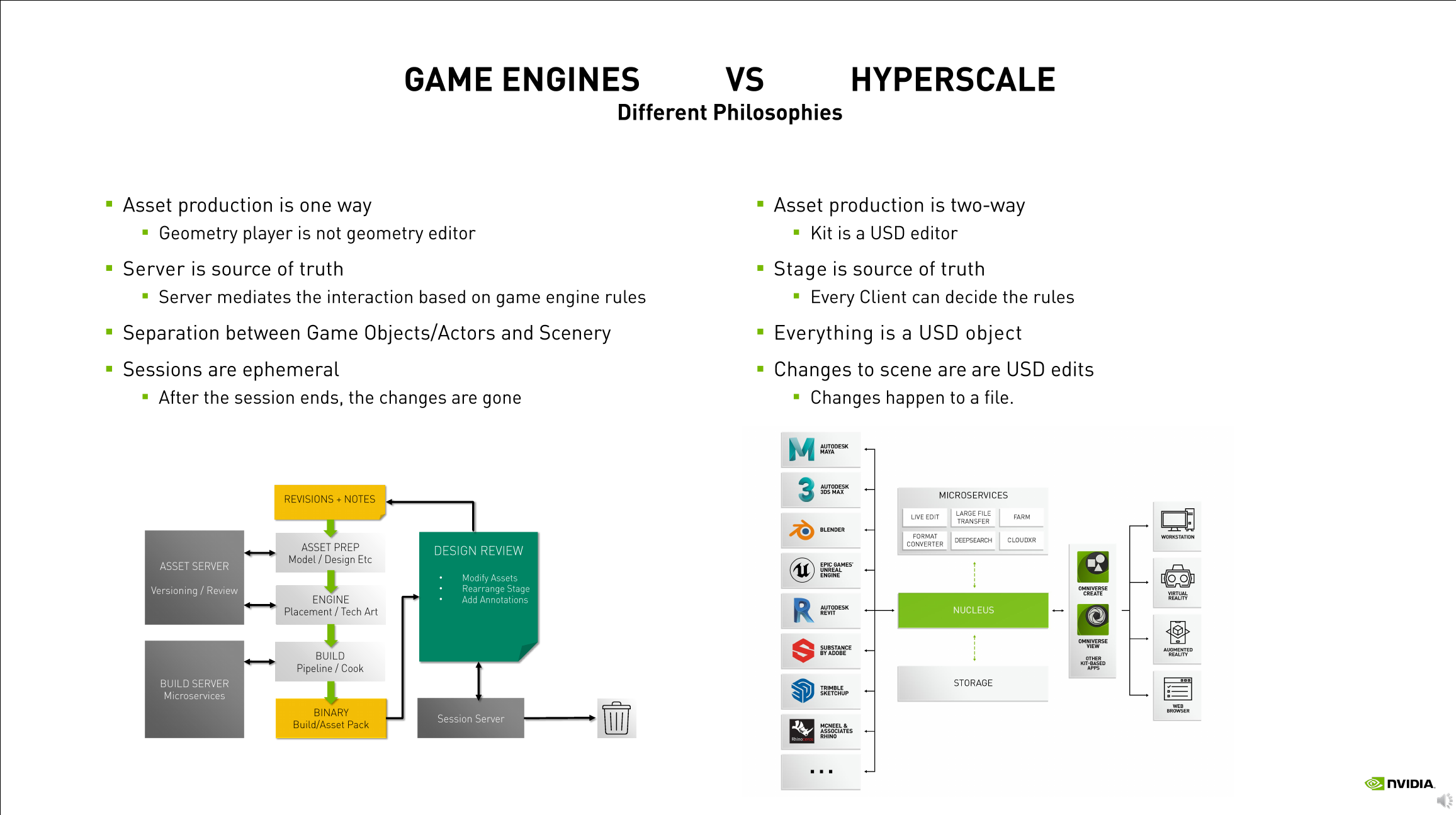

A useful talk comparing the two approaches can be seen here (registration may be required):

https://www.nvidia.com/en-us/on-demand/session/gtcspring22-s42162/

Omer Shapira, a Senior Omniverse Engineer from NVIDIA discusses: “Learn about designing and programming spatial computing features in Omniverse. We’ll discuss Omniverse’s real-time ray-traced extended reality (XR) renderer, Omniverse’s Autograph system for visual programming, and show how customers are using Omniverse’s XR tools to do everything together — from design reviews to hangouts.”

A useful diagram from the PDF slides:

It provides an insightful abstract overview of the relationships between data sources, pipelines and developer/artist/user activity – and, of course, computers. Somewhere there must be cloudy-bits and AI that will soon complicate the picture.

I must try and find the abstract view from an Unreal Engine engineer somewhere – I’m sure the diagram would be different. Nevertheless, the territorial play is the same – a stack from hardware engineering, through software engineering, to ‘experiences’ is basically the monopoly. It comes down to engineering choices – the physical constraints, scientific research, economics and politics of engineering. That defines everything. But it is driven by human desire, a seeming-paradox, top-down but bottom-up.

USD (Universal Scene Description) seems to be on track to be the lingua franca of the metaverse/omniverse/whatevs. A universal file format is an incredibly important idea – not only because there are literally thousands of incompatible, difficult to translate formats, but because of obsolescence. Like human language, digital ‘file language’ evolves and changes; it is currently much more fragile. A significant solar storm might wipe out AI and all digital knowledge. Biology might not be fried in the deep ocean. We’ve had a few billion years of experience.
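To make the ‘file language’ point a little more concrete, here is a minimal sketch of what a USD scene looks like in its human-readable `.usda` text form. It deliberately uses no USD library, just plain Python string building; the prim names `World` and `Ball`, the radius value and the helper `make_usda` are all invented for illustration and are not taken from any project discussed here.

```python
# Minimal sketch: build the text of a tiny USD layer in .usda (ASCII) form.
# Assumption: names such as "World" and "Ball" are purely illustrative.

def make_usda(root_name: str, sphere_name: str, radius: float) -> str:
    """Return .usda text for one sphere nested under a root transform."""
    return (
        "#usda 1.0\n"                              # header identifying the format
        "(\n"
        f'    defaultPrim = "{root_name}"\n'       # layer metadata: the entry-point prim
        ")\n"
        "\n"
        f'def Xform "{root_name}"\n'               # a transform prim acting as the scene root
        "{\n"
        f'    def Sphere "{sphere_name}"\n'        # a child prim of type Sphere
        "    {\n"
        f"        double radius = {radius}\n"      # a typed attribute on the sphere
        "    }\n"
        "}\n"
    )


scene_text = make_usda("World", "Ball", 2.0)
print(scene_text)
```

Because the result is plain text, it can be read, diffed and archived without any particular vendor’s tools, which is part of why a shared format matters for longevity as well as for interchange.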

Nevertheless, the Hyperscale/Game Engine counterpoint is instructive. From my research into Cloud XR, either via Google Immersive Stream or Azure Cloud XR or Amazon etc. – the problem at scale is simply that you need lots and lots of individual virtual machines to run instances of a ‘world’ and lots of low-latency network traffic to synchronise apparent time (with a sprinkling of predictive AI for the ~20ms perceptual lag barrier).
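The scaling problem can be sketched as back-of-envelope arithmetic. The snippet below is only a rough illustration: the per-stage timings and the users-per-instance figures are hypothetical placeholders, not measurements from any cloud service, and the only number taken from the text is the ~20ms perceptual target.

```python
import math
from dataclasses import dataclass

PERCEPTUAL_TARGET_MS = 20.0  # the ~20ms perceptual lag barrier mentioned above


@dataclass
class StreamBudget:
    """Hypothetical per-frame delays for one cloud-streamed XR session."""
    render_ms: float          # time to render the frame on the virtual machine
    encode_ms: float          # time to compress the frame for streaming
    network_rtt_ms: float     # round trip between headset and data centre
    decode_display_ms: float  # time to decode and present on the device

    def total_ms(self) -> float:
        """Sum of all stages: the motion-to-photon delay without any prediction."""
        return (self.render_ms + self.encode_ms
                + self.network_rtt_ms + self.decode_display_ms)


def instances_needed(users: int, users_per_instance: int) -> int:
    """Number of 'world' instances required, rounding up to whole machines."""
    return math.ceil(users / users_per_instance)


# Illustrative values only (assumptions, not benchmarks).
budget = StreamBudget(render_ms=8.0, encode_ms=4.0,
                      network_rtt_ms=30.0, decode_display_ms=6.0)
overshoot_ms = budget.total_ms() - PERCEPTUAL_TARGET_MS

print(f"total delay: {budget.total_ms()} ms, to be hidden by prediction: {overshoot_ms} ms")
print(f"VMs for 10,000 users at one user each: {instances_needed(10_000, 1)}")
```

With these made-up figures the pipeline overshoots the perceptual target, and that gap is the part the ‘sprinkling of predictive AI’ would have to cover. The instance count grows linearly with users unless several people can share one running ‘world’.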

Strangely, this sounds like an ecosystem.

This is clearly a problem that Omniverse tries to address, but it won’t work until it is much more open. UE may beat it via the Omniverse of the expanded Fortnite platform, if they can colonise the hardware and mindware at speed. Or they may collaborate or parasitise each other – hard to tell. Are the metaphors appropriate? There are, of course, many other potential players in this space, even, I expect, disruptors or disruptive technologies that have yet to emerge from someone’s loungeroom.

No doubt agentive AGI systems would approach this in entirely different ways, given their own interests.