Over the last couple of weeks I’ve spent some time virtually attending Nvidia’s GTC Developer Conference, which has been very illuminating. The main take-away for me is that, now that we’re in the Age of AI, it’s time to really start working with cloud services – and that they’re actually becoming affordable for individuals to use.

Of course, like most computer users, I use cloud services every day, and most consumer devices already rely on them: Netflix, iCloud, Apple TV, Google Drive, Cloudstor, social media and so on. These are passive, largely invisible services that one uses as part of entertainment, information or storage systems. More complicated systems for developers include Google Colab, Amazon Web Services (AWS), Microsoft Azure and Nvidia Omniverse, amongst others.

In Australian science programmes there are National Computational Infrastructure (NCI) services such as AuScope Virtual Research Environments, Digital Earth Australia (DEA), Integrated Marine Observing System (IMOS), Australian Research Data Commons (ARDC), and even interesting history and culture applications built atop these such as the Time Layered Cultural Map of Australia (TLCMap). Of course, there are dozens more scattered around various organisations and websites. It’s all quite difficult to discover amidst the acronyms, let alone keep track of, so any list will always be incomplete.

So this is where AI comes in strongly, with its ability to ingest and summarise prodigious volumes of data and information (and to hallucinate rubbish), and this is clearly going to be the way of the future. The AI race is on – here are some interesting (but probably already dated) insights from the AI Index by the Stanford Institute for Human-Centered Artificial Intelligence that are worth absorbing:

- Industry has taken over AI development from academia since 2014.

- Performance saturation on traditional benchmarks has become a problem.

- AI can both harm and help the environment, but new models show promise for energy optimization.

- AI is accelerating scientific progress in various fields.

- Incidents related to ethical misuse of AI are on the rise.

- Demand for AI-related skills is increasing across various sectors in the US (and presumably globally).

- Private investment in AI has decreased for the first time in the last decade (but after an astronomical rise in that decade).

- Proportion of companies adopting AI has plateaued, but those who have adopted continue to pull ahead.

- Policymaker interest in AI is increasing globally.

- Chinese citizens are the most positive about AI products and services, while Americans are among the least positive.

Nevertheless, it is clear to me that the so-called ‘AI pause’ is not going to happen – as Toby Walsh observes:

“Why? There’s no hope in hell that companies are going to stop working on AI models voluntarily. There’s too much money at stake. And there’s also no hope in hell that countries are going to impose a moratorium to prevent companies from working on AI models. There’s no historical precedent for such geopolitical coordination.

The letter’s call for action is thus hopelessly unrealistic. And the reasons it gives for this pause are hopelessly misguided. We are not on the cusp of building artificial general intelligence, or AGI, the machine intelligence that would match or exceed human intelligence and threaten human society. Contrary to the letter’s claims, our current AI models are not going to “outnumber, outsmart, obsolete and replace us” any time soon.

In fact, it is their lack of intelligence that should worry us”

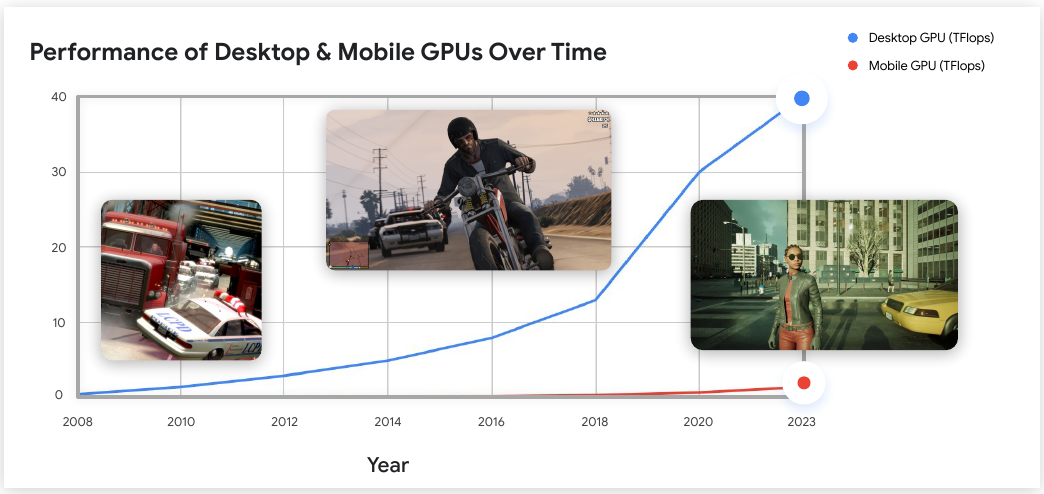

The upshot of this, for the work Chris and I are doing, is clearly that we need to embrace AI-in-XR in the development of novel modalities for Earth Observation using Mixed Reality. It’s simply not going to be very compelling doing something as simple as, for example, visualising data in an XR application (such as an AR phone app) that overlays the scene. I’ve now seen many clunky examples of this, and none of them seem especially compelling, useful or widely adopted. The problem really comes down to the graphics capabilities of mobile devices, as this revealing graph demonstrates:

(screenshot from Developing XR Experiences for Every Device (Presented by Google Cloud))

A potential solution arrives with XR ‘in the cloud’ – and it is evident from this year’s GTC that all the big companies are making a major play in this space, with billions of dollars going into infrastructure development. And it’s not just ‘XR’ but ‘XR with AI’ and high-fidelity, low-latency pixel-streaming. So, my objective is to ride on the coat-tails of this in a low-budget arty-sciencey way, and make the most of the resources that are now becoming available for free (or low-cost) as these huge industries attempt to on-board developers and explore the design-space of applications.

As you might imagine, it has been frustratingly difficult to find documentation and examples of how to go about doing this, as it is all so new. This is what you expect with emergent and cutting-edge technologies (and almost everyone trying to make a buck off them), and thankfully it’s something I am used to from my own practice and research: chaining together systems and workflows in the pursuit of novel outcomes.

It’s been a lot to absorb over the last few weeks, but I’m now at the stage where I can begin implementing an AI agent (using the OpenAI API) that one can query with a voice interface (and yes, it talks back), running within a cloud-hosted XR application suitable for e.g. VR HMDs, AR mobile devices, and mixed-reality devices like the HoloLens 2 (I wish I had one!). It’s just a sketch at this stage, but I can see the way forward, if/when I can get access to the GPT-4 API and plugin architecture, towards creating a kind of Earth Observation ‘Oracle’ and a new modality for envisioning and exploring satellite data in XR.
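To make the shape of such an agent concrete, here is a minimal Python sketch of the listen, ask, speak loop. It is an illustration under stated assumptions, not the actual implementation: the `VoiceAgent` class, the `SYSTEM_PROMPT` text and the three pluggable callables (`transcribe`, `complete`, `speak`) are all hypothetical names, and the speech-to-text and text-to-speech parts are left as stubs because the post does not say which services are used. Only `call_openai_chat` talks to a real service, the OpenAI Chat Completions endpoint, and it needs a valid API key.

```python
import json
import urllib.request
from typing import Callable, Dict, List

Message = Dict[str, str]

# Hypothetical system prompt; the real agent's instructions are not given in the post.
SYSTEM_PROMPT = "You answer spoken questions about Earth Observation data."


def call_openai_chat(messages: List[Message], api_key: str,
                     model: str = "gpt-3.5-turbo") -> str:
    """POST the conversation to the Chat Completions endpoint and return the reply text."""
    request = urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=json.dumps({"model": model, "messages": messages}).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


class VoiceAgent:
    """Keeps the conversation history and wires speech-in to speech-out."""

    def __init__(self,
                 transcribe: Callable[[bytes], str],
                 complete: Callable[[List[Message]], str],
                 speak: Callable[[str], None]) -> None:
        self.transcribe = transcribe  # speech-to-text (assumed external service)
        self.complete = complete      # language model call
        self.speak = speak            # text-to-speech (assumed external service)
        self.history: List[Message] = [{"role": "system", "content": SYSTEM_PROMPT}]

    def handle_utterance(self, audio: bytes) -> str:
        """Transcribe one utterance, ask the model, speak and return the answer."""
        question = self.transcribe(audio)
        self.history.append({"role": "user", "content": question})
        answer = self.complete(self.history)
        self.history.append({"role": "assistant", "content": answer})
        self.speak(answer)
        return answer


if __name__ == "__main__":
    # Offline demo with stand-in components, so no network or API key is needed.
    agent = VoiceAgent(
        transcribe=lambda audio: audio.decode("utf-8"),
        complete=lambda messages: f"You asked: {messages[-1]['content']}",
        speak=print,
    )
    agent.handle_utterance(b"Where is cloud cover lowest today?")
```

To use the real model, `complete` could be swapped for something like `lambda messages: call_openai_chat(messages, api_key)`. Keeping the three stages as plain callables means the same loop should carry over when the speech and model services move to a cloud host.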

Currently I’m using OpenAI GPT3.5-turbo and playing around with a local install of GPT4-x_Alpaca and AutoGPT, and local pixel-streaming XR. The next step is to move this over to Azure CloudXR and Azure Cognitive Services. Of course, it’s all much more complicated than it sounds, so I expect a lot of hiccups along the way, but nothing insurmountable.

I’ll post some technical details and (hopefully) some screencaps in a future post.