ChatGPT (Generative Pre-trained Transformer)[1] is an LLM chatbot launched by OpenAI in November 2022: https://chat.openai.com

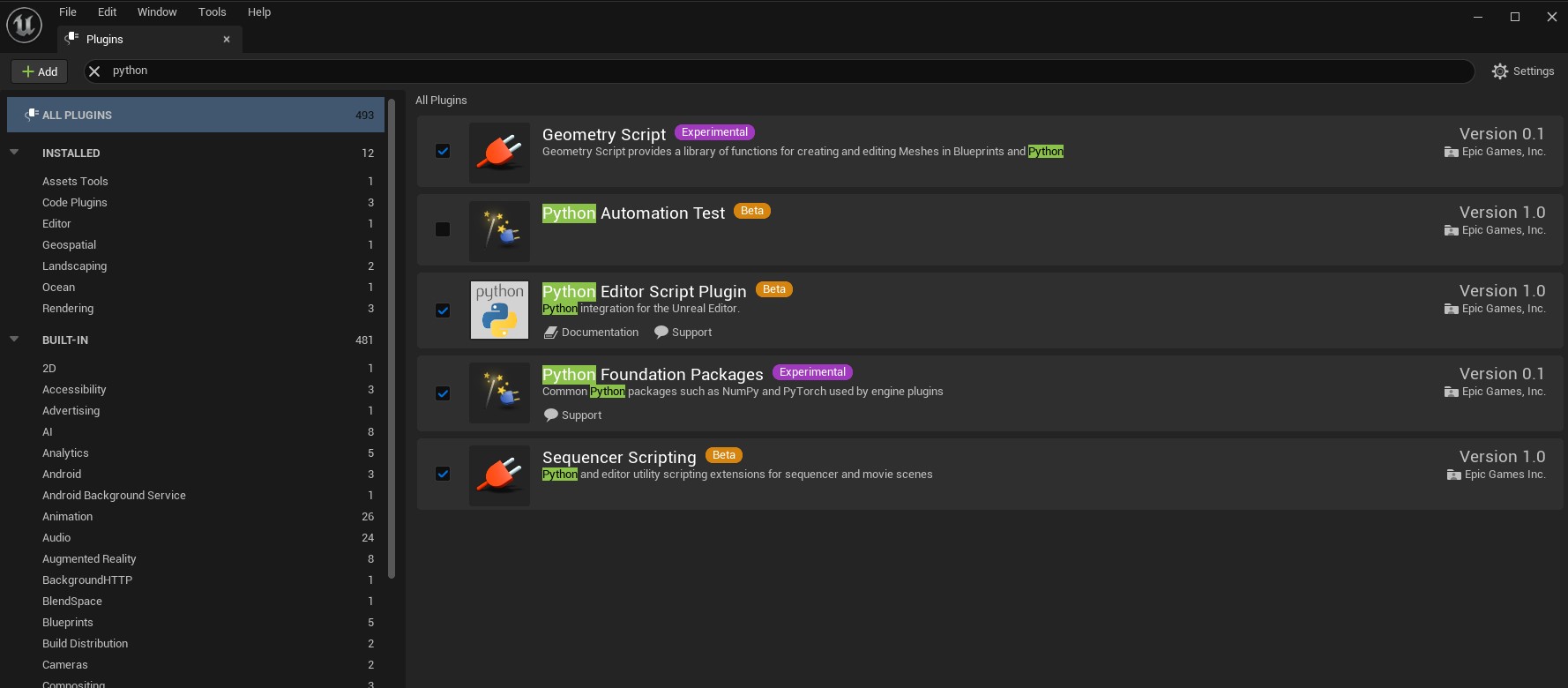

Scripting the Unreal Editor Using Python

An important aspect of our visualisation pipeline involves chaining together Unreal Engine and Python workflows.

The UE 5.1 Python introductory documentation lists some common examples of what might be done:

- Construct larger-scale asset management pipelines or workflows that tie the Unreal Editor to other 3D applications that you use in your organization.

- Automate time-consuming Asset management tasks in the Unreal Editor, like generating Levels of Detail (LODs) for Static Meshes.

- Procedurally lay out content in a Level.

- Control the Unreal Editor from UIs that you create yourself in Python.
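As a rough illustration of the "procedurally lay out content in a Level" item, here is a minimal sketch rather than a tested pipeline script. The `unreal` module is only available inside the Unreal Editor's embedded Python interpreter, so the import is guarded. The helper names `grid_positions` and `spawn_grid` are hypothetical, and the use of `unreal.EditorLevelLibrary.spawn_actor_from_class` is an assumption about the UE 5.1 editor API that should be checked against the API documentation.

```python
# Sketch only: `unreal` exists only inside the Unreal Editor's Python
# interpreter, so fall back to None when run anywhere else.
try:
    import unreal
except ImportError:
    unreal = None


def grid_positions(rows, cols, spacing):
    """Return (x, y, z) positions for a rows x cols grid on the ground plane.

    `spacing` is in Unreal units (centimetres by default).
    """
    return [(r * spacing, c * spacing, 0.0)
            for r in range(rows)
            for c in range(cols)]


def spawn_grid(rows=3, cols=3, spacing=200.0):
    """Spawn one empty StaticMeshActor per grid position (editor only)."""
    if unreal is None:
        raise RuntimeError("spawn_grid() must be run inside the Unreal Editor")
    actors = []
    for x, y, z in grid_positions(rows, cols, spacing):
        # Assumed editor API call: spawns an actor of the given class
        # at the given location in the currently open Level.
        actor = unreal.EditorLevelLibrary.spawn_actor_from_class(
            unreal.StaticMeshActor, unreal.Vector(x, y, z))
        actors.append(actor)
    return actors
```

Inside the editor, calling `spawn_grid(2, 2, 500.0)` from the Python console would be expected to place four actors; outside the editor only `grid_positions` is usable.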

Extensive UE Python API documentation is listed here.

A useful introductory video on YouTube:

Technical Webinar: Can satellites monitor crop and pasture quality across Australia?

From the SmartSat CRC Newsletter:

This Technical Presentation hosted by Ha Thanh Nguyen, Research Scientist in Digital Interactions (Agriculture & Food) at CSIRO explores the outcomes of SmartSat Project P3.25: Can satellites monitor crop and pasture quality across Australia?

Knowledge of crop and pasture quality can provide the industry with insights to assist with the grazing management of pastures and input management decisions for crops. Handheld and lab-based spectroscopy have been extensively employed to monitor quality-based plant attributes. The methods employed are time consuming and expensive to implement and do not provide the industry with insights into the temporal trends of the critical variables. High resolution and frequent return time can overcome numerous deficiencies affecting equivalent visible IR and SWIR platforms, that limit the ability to create a viable product around crop and pasture quality. This project conducted a feasibility analysis capitalising on existing and planned satellite missions, including the Aquawatch satellites and precursors to test development of new high frequency products for crop and pasture quality across the Australian landscape. This project is led by Dr Roger Lawes, Principal Research Scientist at CSIRO Agriculture Flagship and includes participants from CSIRO and the Grains Research and Development Corporation. For more information visit https://smartsatcrc.com/research-prog…

HDFView

HDFView is a visual tool written in Java for browsing and editing HDF (HDF5 and HDF4) files: https://www.hdfgroup.org/downloads/hdfview/

Scope: Hyperspectral Imaging, Earth Model, Unreal Engine

Our initial scope is to examine how we can use hyperspectral satellite data within Unreal Engine (UE).

Hyperspectral Imaging

Wikipedia entry for Hyperspectral Imaging:

Hyperspectral imaging collects and processes information from across the electromagnetic spectrum.[1] The goal of hyperspectral imaging is to obtain the spectrum for each pixel in the image of a scene, with the purpose of finding objects, identifying materials, or detecting processes.[2][3] There are three general branches of spectral imagers. There are push broom scanners and the related whisk broom scanners (spatial scanning), which read images over time, band sequential scanners (spectral scanning), which acquire images of an area at different wavelengths, and snapshot hyperspectral imaging, which uses a staring array to generate an image in an instant.

Whereas the human eye sees color of visible light in mostly three bands (long wavelengths – perceived as red, medium wavelengths – perceived as green, and short wavelengths – perceived as blue), spectral imaging divides the spectrum into many more bands. This technique of dividing images into bands can be extended beyond the visible. In hyperspectral imaging, the recorded spectra have fine wavelength resolution and cover a wide range of wavelengths. Hyperspectral imaging measures continuous spectral bands, as opposed to multiband imaging which measures spaced spectral bands.[4]

Hyperspectral imaging satellites are equipped with special imaging sensors that collect data as a set of raster images, typically visualised as a stack, with each layer representing a discrete wavelength captured by the sensor array.

Image by Dr. Nicholas M. Short, Sr. (NASA).

This represents a 3-dimensional hyperspectral data cube, consisting of x,y coordinates that correspond to Earth-coordinates within a defined geodetic reference system, and the λ coordinate which corresponds to spectral wavelength.
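To make the data cube idea concrete, here is a toy sketch in plain Python. The band wavelengths and pixel values are invented for illustration, and the function names are hypothetical. Real products would normally be read from a file and held in an array library, but the indexing logic is the same: one axis for wavelength and two for position.

```python
# Hypothetical toy cube: 4 spectral bands, 2 rows (y), 3 columns (x).
wavelengths_nm = [450.0, 550.0, 650.0, 850.0]

# cube[band][y][x] is the value recorded at that wavelength for that pixel.
cube = [
    [[0.10, 0.12, 0.11], [0.09, 0.10, 0.13]],  # 450 nm layer
    [[0.20, 0.22, 0.21], [0.19, 0.18, 0.23]],  # 550 nm layer
    [[0.15, 0.14, 0.16], [0.13, 0.12, 0.17]],  # 650 nm layer
    [[0.60, 0.62, 0.58], [0.55, 0.57, 0.61]],  # 850 nm layer
]


def pixel_spectrum(cube, x, y):
    """Return the spectrum of one pixel: one value per band, in band order."""
    return [band[y][x] for band in cube]


def band_image(cube, wavelengths, target_nm):
    """Return the 2D raster layer whose wavelength is closest to target_nm."""
    index = min(range(len(wavelengths)),
                key=lambda i: abs(wavelengths[i] - target_nm))
    return cube[index]


# Spectrum of the pixel at column 2, row 1, paired with its wavelengths.
for wavelength, value in zip(wavelengths_nm, pixel_spectrum(cube, 2, 1)):
    print(f"{wavelength:.0f} nm: {value:.2f}")
```

Slicing along the wavelength axis gives an image, and slicing along the two spatial axes gives a spectrum, which is the per-pixel quantity hyperspectral imaging sets out to capture.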

Earth Model

An Earth Model means precisely that – a model of the Earth!

More specifically, the Earth is understood as a highly complex three-dimensional shape. It can be simply represented as a sphere or more accurately as an oblate spheroid, representing the diametric differences between equatorial and polar diameters caused by the rotation of the planet.
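A quick worked example of how oblate the spheroid is. The two constants are the WGS 84 defining values (the standard introduced just below). They are not given in this post, so treat them as an assumption brought in for illustration.

```python
# WGS 84 defining constants (assumed here, not quoted in the post).
WGS84_EQUATORIAL_RADIUS_M = 6378137.0
WGS84_INVERSE_FLATTENING = 298.257223563

# Flattening f relates the equatorial radius a to the polar radius b:
# b = a * (1 - f)
flattening = 1.0 / WGS84_INVERSE_FLATTENING
polar_radius_m = WGS84_EQUATORIAL_RADIUS_M * (1.0 - flattening)

# Difference between the equatorial and polar diameters, in kilometres.
diameter_difference_km = (
    2.0 * (WGS84_EQUATORIAL_RADIUS_M - polar_radius_m) / 1000.0
)

print(f"Polar radius: {polar_radius_m:.3f} m")
print(f"Equatorial minus polar diameter: {diameter_difference_km:.2f} km")
```

The difference comes out at roughly 43 km, which is small against a diameter of about 12,756 km but matters a great deal for accurate georeferencing.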

The scientific study of the shape of the Earth is called Geodesy. Arising from this is the World Geodetic System, about which Wikipedia notes:

The World Geodetic System (WGS) is a standard used in cartography, geodesy, and satellite navigation including GPS. The current version, WGS 84, defines an Earth-centered, Earth-fixed coordinate system and a geodetic datum, and also describes the associated Earth Gravitational Model (EGM) and World Magnetic Model (WMM). The standard is published and maintained by the United States National Geospatial-Intelligence Agency.[1]

Most relevant to creating a digital model of the Earth is the Earth-centred, Earth-fixed coordinate system (or ECEF):

The Earth-centered, Earth-fixed coordinate system (acronym ECEF), also known as the geocentric coordinate system, is a cartesian spatial reference system that represents locations in the vicinity of the Earth (including its surface, interior, atmosphere, and surrounding outer space) as X, Y, and Z measurements from its center of mass.[1][2] Its most common use is in tracking the orbits of satellites and in satellite navigation systems for measuring locations on the surface of the Earth, but it is also used in applications such as tracking crustal motion.

The distance from a given point of interest to the center of Earth is called the geocentric distance, $R = (X^2 + Y^2 + Z^2)^{0.5}$, which is a generalization of the geocentric radius, $R_0$, not restricted to points on the reference ellipsoid surface. The geocentric altitude is a type of altitude defined as the difference between the two aforementioned quantities: $h' = R - R_0$;[3] it is not to be confused for the geodetic altitude.

Conversions between ECEF and geodetic coordinates (latitude and longitude) are discussed at geographic coordinate conversion.
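For reference, here is a minimal sketch of the forward conversion (geodetic latitude, longitude and ellipsoidal height to ECEF X, Y, Z), together with the geocentric distance R from the quoted passage. It uses the standard closed-form formulas with the WGS 84 ellipsoid constants. The function names are hypothetical, and this is not the code used by Unreal Engine or any of the plugins mentioned in this journal.

```python
import math

# WGS 84 ellipsoid constants (assumed; not quoted in the post).
WGS84_A = 6378137.0                    # semi-major (equatorial) axis, metres
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, height_m=0.0):
    """Convert geodetic latitude/longitude (degrees) and ellipsoidal
    height (metres) to ECEF X, Y, Z in metres."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    # Prime vertical radius of curvature at this latitude.
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    x = (n + height_m) * math.cos(lat) * math.cos(lon)
    y = (n + height_m) * math.cos(lat) * math.sin(lon)
    z = (n * (1.0 - WGS84_E2) + height_m) * math.sin(lat)
    return x, y, z


def geocentric_distance(x, y, z):
    """Geocentric distance R = (X^2 + Y^2 + Z^2)^0.5, in metres."""
    return math.sqrt(x * x + y * y + z * z)


# Example: approximate coordinates for Melbourne, at zero ellipsoidal height.
x, y, z = geodetic_to_ecef(-37.8, 145.0, 0.0)
print(x, y, z, geocentric_distance(x, y, z))
```

The reverse conversion (ECEF to geodetic) has no equally simple closed form and is usually solved iteratively, so it is left out of this sketch.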

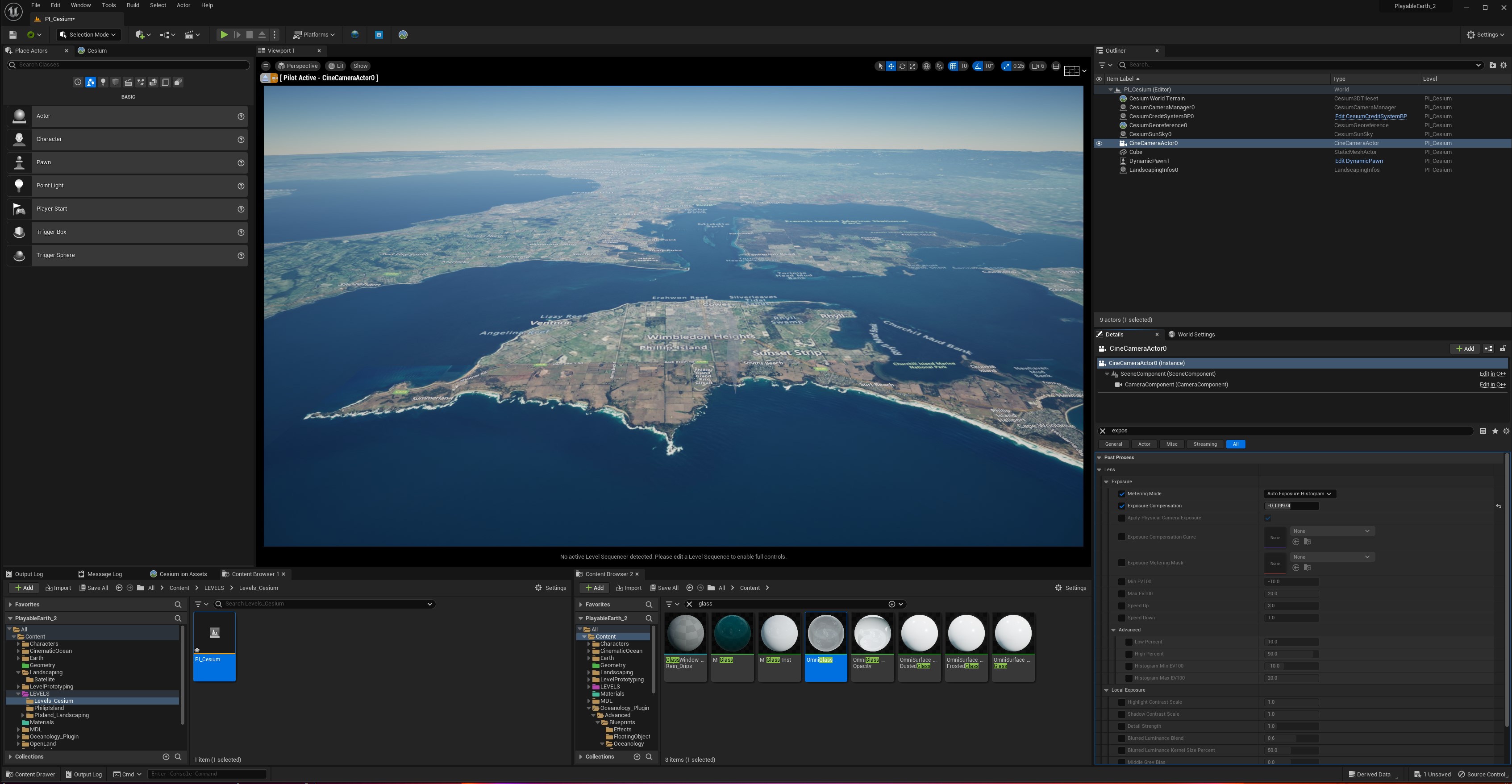

Unreal Engine

Unreal Engine 5.1 provides a comprehensive system for managing ECEF georeferenced data, including ‘flat’ and ‘round’ planet projections:

https://docs.unrealengine.com/5.1/en-US/georeferencing-a-level-in-unreal-engine/

In addition to this, there are a variety of plugins available for managing georeferenced data.

For this research we are evaluating two initial approaches:

Cesium for Unreal: https://cesium.com/platform/cesium-for-unreal/

Landscaping Plugin: https://landscaping.ludicdrive.com

More soon.

Introduction

Hi and welcome to our creative research journal for Playable Earth: Disrupting the status quo of Earth Observation Visualisation through immersion and interaction.

I’m going to keep this pretty informal – basically a blog with posts about our research progress, with links and references to relevant materials.

Chris and I have been meeting regularly since the beginning of the project in late November 2022, and will continue posting throughout the duration of the project until late June 2023. We’ve agreed to run the project on a 0.5 basis, allowing us to extend the residency from the usual three months full-time to ~6 months half-time. This fits in well with our various commitments to other projects and facilitates some cross-hybridisation.

Broadly speaking, the objective of our research is to explore novel ways of using a computer game engine – specifically Unreal Engine – in the deployment and visualisation of Earth Observation data. Our interest is in exploring various aspects of ‘immersive’ visualisation through XR (eXtended Reality). XR is a catch-all abbreviation for an array of technologies including augmented reality (AR) and virtual reality (VR).

An important caveat here is that we need to exemplify the utility of ‘immersive’ media. It’s a vague term that does not explicitly identify the utility of the form – if there is one, beyond obvious entertainment applications. My intuition is that there is, and that it is not terribly complicated – but we need to articulate knowledge about prospective users, human visual perception, semiotics and human-computer interaction as well. More about this in a later post.

Modern computer game engines provide a robust platform for this kind of research, subtended by the huge financial resources of the games industry and its investment in the technological infrastructure of software and compute resources, which are developing at a startling rate. Interaction with advanced machine learning systems – AI – is obvious and imperative. It makes sense to use game engines for sciartistic visualisation, as they provide many useful features consolidated within a systematic approach to programming, content development and human-computer interaction (HCI).

Some difficulties arise in interfacing data formats and approaches commonly used by creative industries technologies (CIT), with more specialised forms used by scientific visualisation and visual analytics.

Furthermore, game engines can enable the development of novel aesthetic approaches towards visualisation that often fall outside the remit of strictly ‘scientific’ visualisation conventions. Thus a prospectively fertile intersection of art and science emerges – they can become much more conversant modalities for eliciting knowledge and insight about the world.

As an artist who has worked extensively with Unreal Engine on a number of personal projects, and as someone with a PhD in computational geophysics focusing on scientific data visualisation, I find the opportunity to work with Chris and the SmartSat CRC at Swinburne both timely and exciting – truly a way to draw together these strands of art and science in some blue-sky research.

We also express our gratitude to the Australian Network for Art and Technology (ANAT), CEO Melissa Delaney and her staff for facilitating this novel approach to supporting this research – we hope it paves the way for future initiatives of this type.

Playable Earth :: ANAT Bespoke Residency

Playable Earth

Disrupting the status quo of Earth Observation Visualisation through immersion and interaction.

This project aims to explore novel ways of visualising and interacting with satellite-originated Earth observation data and analytics using modern game development environments. The goal is to develop novel modalities for extended reality (XR) display and interrogation of selected data, including near real-time observations. The project will be open-ended and speculative, and will document its progress through this creative research journal and video demonstrations. It will also consider the potential of using platforms such as the National Broadband Network and 5G to distribute scientific knowledge and enquiry. The project will be informed by the Capability Demonstrators under development by the SmartSat Cooperative Research Centre, which focus on enhancing disaster resilience, water quality monitoring and situational awareness across the Indian, Pacific and Southern Ocean regions.

ANAT Bespoke Residency

This is the first pilot bespoke residency established between ANAT and a host institution. The residency is hosted by the SmartSat CRC at the Swinburne University Centre for Astrophysics and Supercomputing.

People:

Dr Peter Morse (Computational researcher and experimental media artist)

Bio: https://www.petermorse.com.au/about/

Prof Christopher Fluke (SmartSat Professorial Chair, Swinburne University of Technology)